Identifying points of influence and their impact on AI initiatives

This article is part 2 of a series on AI product management.

The first article examined the different pathways that might have led you to consider an AI-driven product initiative. You can read it here, if you haven’t already:

Orientation points

Embarking on an AI-driven product journey involves more than simply determining how to leverage Large Language Models within your product. As a product leader, you are part of a larger ecosystem that encompasses your product, executive leadership, the broader organisation, and, most crucially, current and prospective customers.

Each of these serves as a vital reference point for evaluating whether your product and company are genuinely prepared for AI-driven features, or if more foundational work is required before proceeding.

The success of your AI initiative will hinge on where you currently stand with respect to each of these key points of influence, and on the actions you take to keep it out of the AI-experiment cemetery (the place where poorly scoped POCs go to vanish or, more colloquially within some organisations, “Phase 2”).

In this article, we will outline these key points of orientation (and influence) and present a framework for assessing the current state of readiness regarding your ecosystem's AI capabilities.

Seats at the table and the people in them

A system is only as efficient as the sum of its parts. One of the key components of the organisational system is your executive leadership team.

If the executives involved in your AI journey are not aligned, the road from concept to production will be long and difficult. Misalignment at the leadership level tends to lead to delays, underfunding, or an AI solution that never reaches the market. It is therefore essential to foster alignment among the executive leadership from day one.

To equip the executive leadership team with the knowledge and insights they need, you first have to evaluate their current understanding of AI, both individually and collectively.

That means grasping where each member of the leadership team stands across several dimensions:

AI Literacy: how well do they understand the mechanics, benefits, and risks of AI, Generative AI, Large Language Models, and so forth?

Willingness to invest in AI: how much time and resources are they prepared to allocate to AI initiatives? How well do they comprehend the potential gains from investing in AI activities?

Appetite for risk: how willing are key stakeholders to experiment and accept uncertainties related to AI outcomes and outputs?

Integration with business strategy: how prepared are they to make a strategically significant investment in AI initiatives as part of their business strategy?

These elements of AI readiness in leadership teams can be assessed separately, but they are closely intertwined in the decision-making process.

Let’s look at AI Literacy as an example. If someone in the leadership team scores ‘Low’ on AI Literacy, their appetite for risk related to AI solutions might be dangerously high, as they have very little knowledge of the actual risks the organisation might be exposing itself to. ‘Advanced’ AI literacy might, conversely, dampen the appetite for risk.

However, the current state of play is not set in stone. It is first and foremost a good indicator of the next steps to take.

Here are a few examples:

If AI Literacy amongst your executive leadership is overall low, you will need to invest some time in getting everyone up to speed before pitching the details of your ROI-changing AI initiative.

There is no such thing as the unknown, only things temporarily hidden.

(Captain Kirk, Star Trek)

If the Appetite for Risk is quite low, you will need to plan your AI roadmap in smaller steps, emphasising incremental value and effective safety guardrails along the way.
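The two examples above can be written down as a small lookup, which is sometimes a handy way to keep an assessment and its follow-up actions in one place. What follows is only a sketch under stated assumptions: the three-level scale, the dimension keys, and the `next_steps` function are illustrative names rather than part of any established framework, and the suggested actions are paraphrased from the examples above.

```python
# Hypothetical three-level scale; the article itself only names 'Low' and 'Advanced'.
LEVELS = ("low", "medium", "advanced")

# Suggested actions, paraphrased from the two examples in the text.
# The dictionary keys are illustrative names chosen for this sketch.
NEXT_STEPS = {
    "ai_literacy": "Get the leadership team up to speed on AI before pitching the initiative.",
    "appetite_for_risk": "Plan the AI roadmap in incremental steps with safety guardrails.",
}


def next_steps(ratings):
    """Return the suggested action for every known dimension rated 'low'."""
    steps = []
    for dimension, level in ratings.items():
        if level not in LEVELS:
            raise ValueError(f"unknown level {level!r} for {dimension!r}")
        if level == "low" and dimension in NEXT_STEPS:
            steps.append(NEXT_STEPS[dimension])
    return steps


# Example: a leadership team with low AI literacy but a healthy appetite for risk.
leadership = {"ai_literacy": "low", "appetite_for_risk": "advanced"}
for step in next_steps(leadership):
    print(step)
```

In practice the ratings would come from conversations with each executive rather than from a dictionary, and most dimensions will need a more nuanced response than a single canned action.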

The wider organisation

Beyond the executive leadership, pushback and pull for an AI initiative also come from the broader organisation and its members. If nothing else, AI is a subject matter that brings about highly passionate debates and emotional responses, ranging from colleagues wanting to overhaul the entire product to embrace AI, to those who fear they will be replaced by AI functionality.

If there has ever been a catalyst for adopting change management in organisations, AI is definitely one.

In one engagement with a telco organisation on the verge of swapping its old IVR system for an AI-powered phone assistant, one of the team members was particularly disruptive throughout the product discovery process. A more in-depth conversation with them revealed that, after 20 years of doing things a certain way, fear of change was what stood in the way of embracing the new solution.

No matter how elated you are about adopting AI in your organisation and product, this might not be the case for everyone involved. The reasons behind their reluctance or shared enthusiasm can be mapped back to a few things:

Willingness to embrace AI: how excited or reluctant are colleagues about the opportunities AI presents?

AI Literacy: to what extent do individuals across different levels of the organisation understand AI, its capabilities, and its limitations?

AI Talent and skills: does the organisation have the right expertise internally to implement and manage AI-driven solutions?

Willingness to learn and upskill: how motivated are colleagues to enhance their skills in AI?

Ethical readiness: is the organisation prepared to address the ethical implications of using AI? Do employees and leadership teams understand AI biases and fairness concerns? Are guidelines in place for AI usage?

Competitive landscape: how does the organisation perceive AI in the wider market context? What is the level of competitive pressure when it comes to leveraging AI?

Your product

Your existing product is the foundation upon which you will build your AI functionality, from both a technical and a user experience perspective.

As with all foundations, if yours is shaky… all you are building is a shaky Jenga tower that will fall with the first gust of wind.

Technical foundations: How stable is your core product foundation really?

Data structure & quality: How well structured is your data? Is the quality of your data on par with what is required for AI use?

Infrastructure: How well maintained and sustainable is your infrastructure at scale?

Readiness to integrate AI: How ready is your product to manage the added load, real-time processing, and potential integration with third-party AI services?

Performance of key functionality: How stable, fast and reliable are core functionalities of the product under heavy use?

Let’s say you want to let users interact conversationally with your product documentation, using semantic classification and retrieval-augmented generation (RAG). If your documentation is well structured, has a clear information architecture, and everyone across the organisation follows a standardised structure when writing it, chances are high that a Conversational AI Agent will give users valuable answers that support them in their journey with your product. On the other hand, if your documentation is an unstructured hot mess and everyone documents features willy-nilly… the well-known saying holds true: garbage in, garbage out!
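To make the garbage-in, garbage-out point concrete, here is a toy version of the retrieval step only. It is a sketch, not a RAG implementation: plain word overlap stands in for the semantic matching a real system would do with embeddings, and the function names and sample documents are invented for illustration.

```python
import re
from typing import Dict, Optional


def tokens(text: str) -> set:
    """Lower-case the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: Dict[str, str]) -> Optional[str]:
    """Return the title of the document sharing the most words with the question.

    Word overlap is a deliberately crude stand-in for semantic search.
    Returns None when no document shares a single word with the question.
    """
    question_words = tokens(question)
    best_title, best_score = None, 0
    for title, body in docs.items():
        score = len(question_words & tokens(title + " " + body))
        if score > best_score:
            best_title, best_score = title, score
    return best_title


# Invented sample documentation: one structured set, one "hot mess".
structured_docs = {
    "Reset your password": "Open Settings, choose Security, then select Reset password.",
    "Export a report": "Open Reports, choose a report, then select Export as CSV.",
}
messy_docs = {
    "notes v2 final": "misc stuff, see other page, ask Sam for details",
    "untitled": "todo: write this section later",
}

question = "How do I reset my password?"
print(retrieve(question, structured_docs))  # Reset your password
print(retrieve(question, messy_docs))       # None
```

The generation step can only work with what retrieval hands it, so no model choice compensates for documentation that never states the answer in the first place.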

I’m sorry to break the news to you: AI will not fix a broken user experience. Well, I sincerely hope I am not the one breaking this news to you, because if I am, we’ll need to have a bigger conversation than this article can contain.

AI and UX are, in effect, strategic allies, not just a patch for each other’s wounds.

AI can enhance a product’s user experience in several ways, just as UX considerations can considerably improve AI functionality.

To consider where AI can genuinely benefit your user experience, you must evaluate its current state first.

To do so, you need to take a close look at the following key considerations for your product’s user experience:

Findability: how easily can users locate your product and the features they need within it?

Discoverability: how well do users recognise, understand and use your product’s core functionalities?

Learnability: how easy or difficult is it for users to learn how to make the most of your product’s core functionality?

Usability: how easy and efficient is it for your users to accomplish the core job of using your product?

Accessibility: how usable is your product and its features for users of all abilities?

Many frameworks and user research methodologies exist to assess these elements: Nielsen’s usability heuristics, WCAG Accessibility checkers, UserIndex score, to name a few.

Your users: the cornerstone of your success

Build it, and they will come... unless they aren’t looking in the first place!

Some instrumental pieces of information you need when considering an AI initiative are:

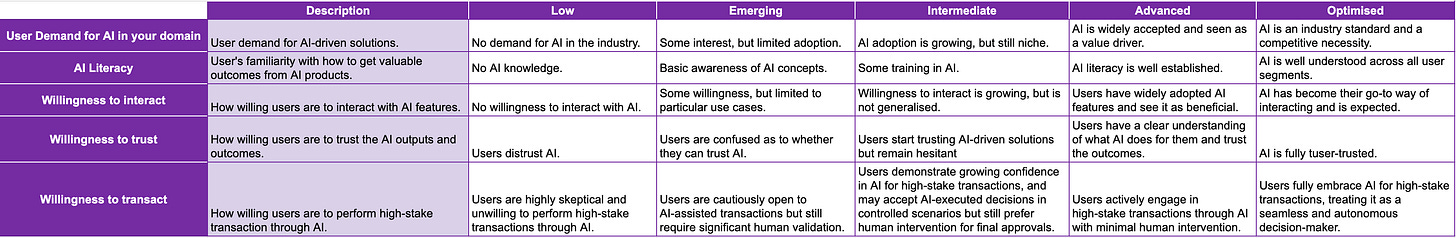

User demand for AI in your domain: is your target audience actively looking for AI solutions? Is AI even on their radar?

Users’ AI Literacy: how well do users from your target audience understand how to use AI features?

Willingness to interact: how willing are your users to interact with AI-driven features?

Willingness to trust: how willing are your users to trust AI outputs and outcomes?

Willingness to transact: how willing are your users to perform high-stakes transactions with AI?

While users might feel comfortable using applications such as ChatGPT to generate content for an email they want to write, for example, they might be more reluctant to give an AI Agent direct access to their email client to send generated emails directly on their behalf.

What to make of it all

Now, all of this raises a lot of questions without necessarily providing specific answers. I wish I could give you a shortcut for doing all this foundational work, but the truth is that where you end up on this orientation grid depends heavily on the ecosystem you work within.

Ready to assess your AI Initiative Readiness?

Most AI projects fail not because of the technology, but because the foundations were poorly considered.

With this in-depth assessment in hand, you are uniquely equipped to map out the pathway to an adopted, high-value AI initiative, including the hills, pitfalls, and storms you might need to weather along the way.

Addressing concerns, fostering education, and ensuring strategic alignment will play a crucial role in successfully embedding AI into the company’s culture and operations.

Join the AI Product Focus tribe

You can expect AI Product Focus to appear regularly with articles including opinion pieces, a practical AI Product Building series, research, frameworks and more.